AiCatcher

Extending machine intelligence into the wild

2019

5 weeks

Awards

Fast Company World Changing Ideas:

Finalist (Student)

Honourable Mention (Experimental)

Core77 Design Awards

Runner up (Interaction)

AiCatcher is an intelligent camera trap for conservationists and forest officials that brings computer vision and machine intelligence into the wild. It accurately and non-invasively identifies animals and alerts officials to their whereabouts. The camera trap can also be programmed to identify unauthorised people in forest reserves and notify officials, enabling faster action against poaching.

Context

In 2018, even as the tiger census across India was underway, over 100 tigers were found dead: electrocuted, poisoned, poached, or killed after straying onto highways and into towns. These cases are not limited to tigers. Leopards, elephants, pangolins and other scheduled species are also threatened. Poaching, habitat loss and conflict with humans have strained our relationship with wildlife, and we must design more inclusive responses to improve it.

AiCatcher was inspired by conversations with researchers and conservationists in the field, whose experiences shaped a system that supports their role as crucial influencers in their communities. They currently rely on camera traps deployed in the forest to study wildlife; these research tools have become a critical part of understanding animal habitats and populations. AiCatcher uses Edge AI to reimagine the camera trap as a proactive monitoring aid.

How it works

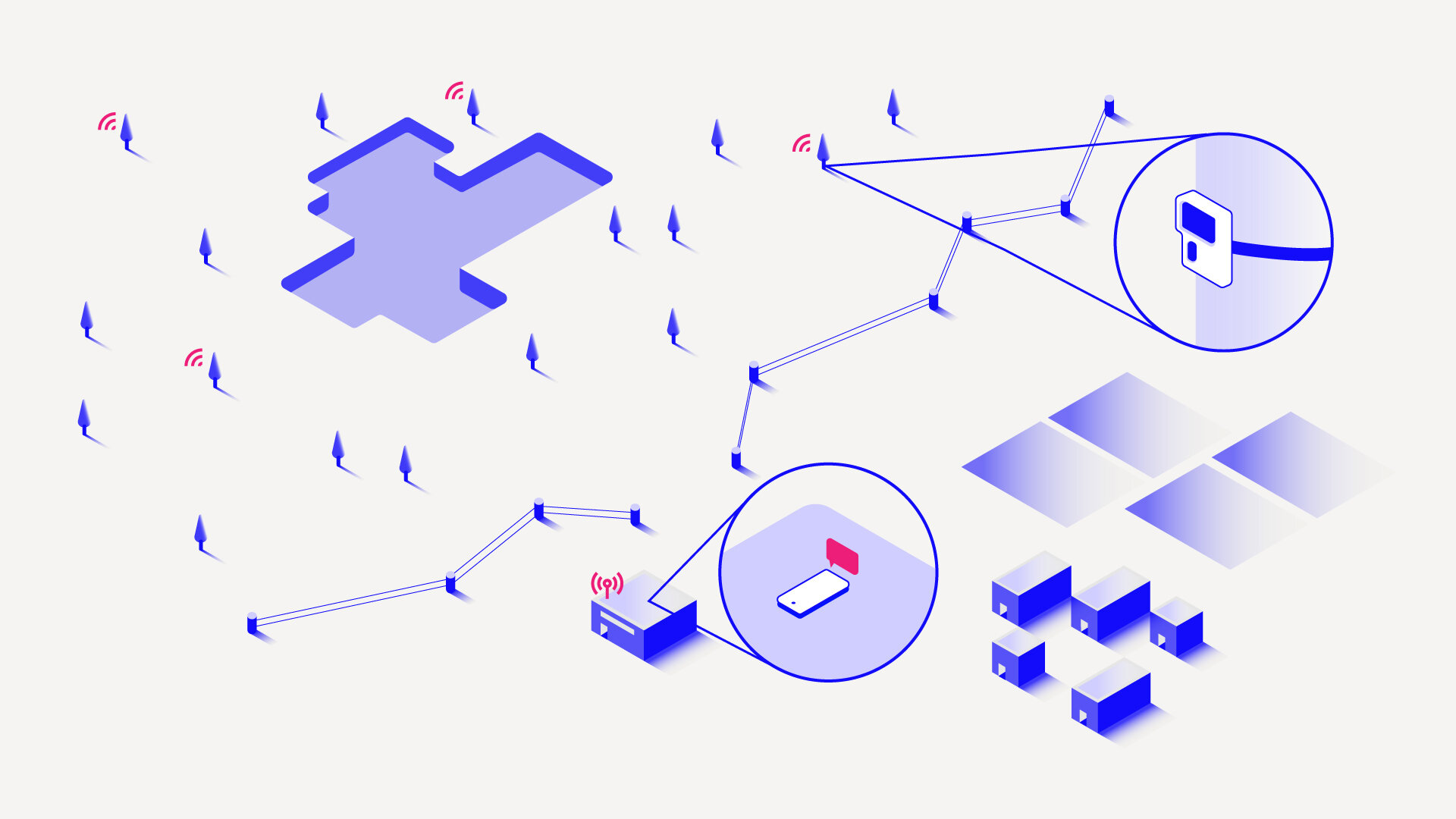

AiCatcher camera traps are deployed in strategic forest areas: close to human settlements or electric fencing, or in frequently visited spots known to contain prey or water. When an animal triggers the motion and thermal sensors, multiple images and associated data are captured and saved. At the same time, the computer vision model identifies the animal and sends a notification to officials, who can then alert locals and prevent needless encounters.
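
Because each trigger captures several images, one way to turn a burst into a single notification is to average the model's per-label confidence across the frames before deciding whether to alert. The sketch below is a minimal, standard-library illustration of that idea, not AiCatcher's actual code; the alert label set, the function names and the message format are all hypothetical.

```python
from collections import defaultdict

# Hypothetical labels that should raise an alert; "person" stands in for the
# unauthorised-entry use case. The deployed label set may differ.
ALERT_LABELS = {"tiger", "leopard", "elephant", "person"}


def aggregate_burst(frame_predictions):
    """Average each label's confidence over every frame in one trigger burst.

    frame_predictions is a list with one {label: confidence} dict per frame;
    a label missing from a frame counts as 0 for that frame.
    """
    if not frame_predictions:
        raise ValueError("a burst needs at least one frame")
    totals = defaultdict(float)
    for frame in frame_predictions:
        for label, confidence in frame.items():
            totals[label] += confidence
    best = max(totals, key=totals.get)
    return best, totals[best] / len(frame_predictions)


def alert_for(camera_id, frame_predictions):
    """Return a short alert string for alert-worthy labels, otherwise None."""
    label, confidence = aggregate_burst(frame_predictions)
    if label not in ALERT_LABELS:
        return None
    return f"Camera {camera_id}: {label} ({confidence:.0%})"
```

Averaging over the burst means a single blurry or partly occluded frame is less likely to flip the result than if only the first frame were classified.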

If an animal is not recognised with high confidence, its images are stored to expand the training dataset. Over time, this reduces false positives and improves the quality and accuracy of the captured images and collected data. This data can also inform captive breeding and rehabilitation programs.
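
The confidence check can be sketched as a small routing step: confident detections go on to notification, and everything else is copied to a review folder for later labelling. The 0.85 threshold, the folder layout and the `route_capture` name are hypothetical choices for illustration, not the prototype's settings.

```python
import shutil
from pathlib import Path

CONFIDENCE_THRESHOLD = 0.85  # hypothetical value; would be tuned per deployment


def route_capture(image_paths, confidence, review_dir, threshold=CONFIDENCE_THRESHOLD):
    """Decide what happens to one capture burst (hypothetical helper).

    Confident detections return "notify". Anything below the threshold is
    copied into review_dir so it can be labelled later and added to the
    training data, and the function returns "review".
    """
    if confidence >= threshold:
        return "notify"
    review_dir = Path(review_dir)
    review_dir.mkdir(parents=True, exist_ok=True)
    for path in image_paths:
        # copy2 keeps file timestamps, which preserves when the image was taken
        shutil.copy2(path, review_dir / Path(path).name)
    return "review"
```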

AiCatcher does not require an internet connection; it communicates over long-range radio (LoRa). Depending on the geography, different models can be trained to identify different combinations of animals. The current prototype can identify tigers, leopards and elephants, with more animals being added.
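
LoRa trades bandwidth for range, so an alert is best sent as a few packed bytes rather than as text or JSON. A minimal sketch with Python's `struct` module follows; the 8-byte field layout and the species codes are assumptions for illustration, not the prototype's actual message format.

```python
import struct
import time

# Hypothetical species codes; the real model's label set may differ.
SPECIES_CODES = {"empty_forest": 0, "tiger": 1, "leopard": 2, "elephant": 3, "person": 4}
CODE_TO_SPECIES = {code: name for name, code in SPECIES_CODES.items()}

# Assumed layout, big-endian with no padding: camera id (uint16),
# species code (uint8), confidence in percent (uint8), Unix time (uint32) = 8 bytes.
PAYLOAD_FORMAT = ">HBBI"


def encode_detection(camera_id, species, confidence, timestamp=None):
    """Pack one detection into a compact radio payload."""
    if timestamp is None:
        timestamp = int(time.time())
    return struct.pack(PAYLOAD_FORMAT, camera_id, SPECIES_CODES[species],
                       round(confidence * 100), timestamp)


def decode_detection(payload):
    """Unpack a payload produced by encode_detection back into a dict."""
    camera_id, code, percent, timestamp = struct.unpack(PAYLOAD_FORMAT, payload)
    return {"camera_id": camera_id, "species": CODE_TO_SPECIES[code],
            "confidence": percent / 100, "timestamp": timestamp}
```

Rounding confidence to whole percent loses a little precision but keeps the field to a single byte, which is plenty for an alert.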

A prototype was tested in Bannerghatta National Park in India. A tiger was spotted and a notification was received via text message. It is now being developed further in collaboration with the Nature Conservation Foundation as a rapid response system to avoid human-elephant conflict in Hassan, Karnataka.

While networked AI systems analyse and help solve big problems from the top down, smaller Edge AI devices work from the bottom up to connect the dots on the ground. In wildlife conservation, networked AI is currently used to analyse huge amounts of wildlife data to understand larger patterns and long-term trends in behaviour and climate. However, it will be Edge AI deployed in forests that makes the day-to-day work of people in the forests and in the field easier.

Making & Learning

AiCatcher is built using TensorFlow and Google’s AIY development kit, and uses LoRa to communicate. LoRa signals are converted using Python.

Multiple experiments were carried out by training a machine learning model to recognise tigers, leopards, giraffes, people and empty forests. The training dataset drew at least 9,000 images per animal from existing camera trap databases. These experiments also gave me a brief glimpse into the opportunities and drawbacks of machine learning.
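
A quick sanity check before training is to count the images in each class and flag any class below the 9,000-image target. This sketch assumes one sub-folder per class (the layout Keras' `image_dataset_from_directory` expects); the folder structure and function names are illustrative assumptions.

```python
from pathlib import Path

MIN_IMAGES_PER_CLASS = 9000  # the per-class target stated in the text
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_images(dataset_dir):
    """Count image files per class, assuming dataset_dir/<class_name>/<image>."""
    counts = {}
    for class_dir in sorted(Path(dataset_dir).iterdir()):
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1 for f in class_dir.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
            )
    return counts


def classes_below_target(counts, minimum=MIN_IMAGES_PER_CLASS):
    """Return only the classes that still need more images."""
    return {name: n for name, n in counts.items() if n < minimum}
```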

Exp 1: Identifying individual animals

Built using ImageAI and the Snapshot Serengeti dataset. I learned about object recognition and image classification models: image classification verifies whether a given target or object is present in the image, while object recognition identifies where the target or object is within the image. The model was trained locally, which I would not recommend at all: it is very processing-heavy and can take a toll on the computer.
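
The difference between the two model types shows up in their outputs. In this simplified sketch, a classifier's raw scores are turned into probabilities with softmax and yield one label for the whole image, while a detector returns a list of hits, each with its own bounding box. The detection dictionary format is a hypothetical stand-in, not ImageAI's own result format.

```python
import math


def softmax(logits):
    """Convert raw classifier scores into probabilities that sum to 1."""
    peak = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, labels):
    """Image classification: one label and its probability for the whole image."""
    probs = softmax(logits)
    best = max(range(len(labels)), key=probs.__getitem__)
    return labels[best], probs[best]


def detections_for(detections, label, min_confidence=0.5):
    """Object detection: every hit carries its own label, score and box
    (x_min, y_min, x_max, y_max), so we can ask where the target is.
    The dict keys used here are hypothetical."""
    return [d["box"] for d in detections
            if d["label"] == label and d["confidence"] >= min_confidence]
```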

Exp 2: Camouflage and experiencing bias

Used MobileNet and Google AIY, trained on the GBIF dataset to detect the presence of tigers and leopards in an image. This experiment also showed me the inaccuracies and “biases” that can arise when training on visually similar objects. The volume of tiger data far exceeded that of leopard data; as a result, the model often mistook leopards for tigers, and its confidence increased only when the leopard occupied a large part of the frame. On the other hand, it was able to detect tigers even in camouflage, but many questions remained about its legitimacy because of the flaws in training.
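
One common mitigation for this kind of imbalance, besides collecting more leopard images, is to weight each class inversely to its frequency during training, using the same "balanced" formula as scikit-learn. This is a swapped-in technique, not what was done in this experiment, and the counts in the test are hypothetical. Keras' `Model.fit` accepts the result through its `class_weight` argument, keyed by class index.

```python
def balanced_class_weights(counts):
    """Weight each class inversely to its frequency:
        weight_c = total_samples / (n_classes * count_c)
    so every class contributes the same total weight to the loss."""
    total = sum(counts.values())
    n_classes = len(counts)
    return {label: total / (n_classes * count) for label, count in counts.items()}


def to_keras_class_weight(weights, class_names):
    """Re-key the weights by class index, the form Keras' class_weight expects.
    class_names must be in the same order as the model's output units."""
    return {index: weights[name] for index, name in enumerate(class_names)}
```

With three times as many tiger images as leopard images, each leopard example counts three times as much as each tiger example, so the rarer class is no longer drowned out.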

Exp 3: More biases and moving targets

Camera traps capture a lot of false positives. So, to understand whether the model could actually detect tigers and leopards hidden in plain sight, I divided existing camera trap image data into three categories: tigers, leopards and empty forests. False positives were classified as empty forests, and the tiger and leopard classes were kept the same size. The result demonstrated that the model can discern the animal from its environment, and when shown a video, it changes its “mind” as we get closer to the leopard.
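
The dataset preparation for this experiment can be sketched as follows: empty triggers are relabelled as empty forest, and the larger of the tiger and leopard classes is randomly downsampled to match the smaller. The `(path, annotation)` record format and the use of `None` for an empty trigger are assumptions about how the camera trap annotations are stored.

```python
import random


def build_balanced_split(records, seed=0):
    """records: list of (image_path, annotation) pairs (hypothetical format).

    annotation is "tiger", "leopard", or None for a false trigger. False
    triggers become "empty_forest"; tiger and leopard are downsampled to the
    same size. Annotations for any other species are skipped.
    """
    by_class = {"tiger": [], "leopard": [], "empty_forest": []}
    for path, annotation in records:
        if annotation is None:
            by_class["empty_forest"].append(path)
        elif annotation in by_class:
            by_class[annotation].append(path)
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    n = min(len(by_class["tiger"]), len(by_class["leopard"]))
    by_class["tiger"] = rng.sample(by_class["tiger"], n)
    by_class["leopard"] = rng.sample(by_class["leopard"], n)
    return by_class
```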

Exp 4: Sending LoRa messages & receiving an SMS

On the communication side, I used two Adafruit LoRa Bonnets configured for a LoRaWAN network to transmit and receive data. The receiver converts incoming signals into SMS messages using Twilio.
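
The receiver's last step can be sketched against Twilio's REST API, which creates an SMS from a form-encoded POST to the account's `Messages.json` endpoint with HTTP Basic authentication. To stay dependency-free, this sketch uses only `urllib` rather than Twilio's Python helper library, and it only builds the request; `urllib.request.urlopen(request)` would send it. The account SID, token, phone numbers and message wording are placeholders.

```python
import base64
import urllib.parse
import urllib.request

TWILIO_MESSAGES_URL = "https://api.twilio.com/2010-04-01/Accounts/{sid}/Messages.json"


def alert_text(camera_id, species, confidence):
    """Hypothetical SMS wording for one decoded LoRa detection."""
    return f"AiCatcher {camera_id}: {species} detected ({confidence:.0%} confidence)"


def build_sms_request(account_sid, auth_token, from_number, to_number, body):
    """Build, but do not send, a Twilio 'create Message' POST request."""
    data = urllib.parse.urlencode(
        {"From": from_number, "To": to_number, "Body": body}).encode()
    request = urllib.request.Request(
        TWILIO_MESSAGES_URL.format(sid=account_sid), data=data, method="POST")
    # Twilio authenticates with HTTP Basic auth: account SID as user, token as password
    credentials = base64.b64encode(f"{account_sid}:{auth_token}".encode()).decode()
    request.add_header("Authorization", f"Basic {credentials}")
    request.add_header("Content-Type", "application/x-www-form-urlencoded")
    return request
```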